The emergence of AI-generated images

Through the progress of DALL·E 2, MidJourney and Stable Diffusion, we are entering a new age of image generation driven by artificial intelligence.

Experiments with MidJourney and Stable Diffusion

Through the progress of DALL·E 2[1], MidJourney[2] and Stable Diffusion[3], we are entering a new age of image generation driven by artificial intelligence. All these technologies take a text prompt and transform it into a picture of what you are looking for. The research community refers to this approach as "diffusion-based models"[4], an artificial intelligence technique that can create remarkably creative and realistic images.
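To make the term a little more concrete: a diffusion model is trained by gradually adding Gaussian noise to images and learning to undo that noise step by step, so that generation can start from pure noise. The sketch below shows only the forward (noising) half, using the closed-form expression and the linear noise schedule (beta from 1e-4 to 0.02 over 1,000 steps) from the DDPM paper by Ho et al. (2020). It is a toy on four pixel values in plain Python; the function names are my own, and it is not code from DALL·E 2, MidJourney or Stable Diffusion (Stable Diffusion, for example, runs the process on a compressed latent representation rather than on raw pixels).

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced noise variances beta_1..beta_T (the linear DDPM schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    result, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        result.append(running)
    return result


def noisy_sample(x0, t, a_bars, rng):
    """Jump straight to step t: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    a_bar = a_bars[t]
    return [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    a_bars = alpha_bars(linear_beta_schedule(1000))
    pixels = [0.8, -0.2, 0.5, 1.0]  # a toy "image" with values in [-1, 1]
    for t in (0, 250, 999):
        noisy = noisy_sample(pixels, t, a_bars, rng)
        print(t, round(a_bars[t], 4), [round(v, 2) for v in noisy])
```

At step 0 the sample is almost identical to the input; by step 999, alpha_bar is close to zero and the output is essentially pure noise. The trained network learns the reverse direction, predicting the added noise so that it can be removed one step at a time, guided by the text prompt.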

While DALL·E 2 and MidJourney are closed solutions, Stable Diffusion is open[5], offering both the model weights and the complete source code. The openness of Stable Diffusion allows researchers to explore the technology in more detail and adjust its internal parameters.

I am deeply impressed by these new AI-based technologies, which allow people to dream and explore new ideas. They enable a completely different design process in creative professions.

- A painter can use these tools to gather ideas for a specific painting.

- An interior designer can use these tools to get ideas for living room designs based on input from their clients.

- Graphic designers can use these tools to create posters faster and with more specific content. The technologies can even create collages of several pictures from text input and blend the images into one another using inpainting. The results are spectacular.

- A fashion designer can find ideas and inspiration for new clothes faster and in greater variety.

- Anyone can use these tools to generate the art they imagine: the democratisation of art. The possibilities are endless!

As Benj Edwards describes in his article[6], reactions are divided. Some artists are delighted by the prospect, while others are unhappy. Most of society remains largely unaware of these rapidly evolving technologies.

From here on, AI-based image generation will become more widely available and more democratised. The technologies are not yet perfect in every respect, but imagine the progress we will see one year from now.

Below, you will find examples from each model, along with additional resources.

MidJourney

Like the other artificial intelligence models in this article, MidJourney produces images from textual descriptions. The program entered open beta in July 2022. MidJourney uses a freemium business model, with a limited free tier and paid tiers that offer faster access, greater capacity and additional features. Users interact with MidJourney through Discord bot commands.

In this video, Scott Detweiler[7] introduces MidJourney and shows its potential as an art creation tool. He demonstrates how to communicate with the Discord bot and shares some initial tips and tricks. More specialised videos on MidJourney are available on his YouTube channel[8].

Please go to the MidJourney homepage[9] to join the open beta. The homepage also provides basic information and community showcases.

The following collage shows portraits that I either generated myself or took from the community showcase page[10]. The first portrait shows Ernest Hemingway, while none of the people in the other images exist; they were generated artificially from text prompts.

The text prompt «colour portrait of Ernest Hemingway on a sail boat in the bahamas, hyper realistic, 4k, ultra-detailed, nikon» was used to generate the first picture.

To generate the last picture, a more complex prompt was used:

«a portrait of a handsome WW2 soldier sitting with on the beach at Normandy. He is a perfect mix of John Hamm, Brad Pitt, Jim Morrison and Ashton Kutcher, with bright hazel-green eyes, wearing a stunning futuristic 3d-printed soldier garment and a highly intricate 3d-printed futuristic carved helmet-crown with ornately carved ivory fangs, tribal paint, hyper-detailed gold filigree and fangtooth bejewelled oracle epaullets. The background is ethereal, magical, dark atmosphere. Spectral cosmic dust and light permeates the cinematic battlefield portrait, dynamic, hyperrealistic, fine details, spiritualcore, amazing backlighting, extreme detail, symmetrical facial features, elegant, elaborate, hyperrealism, hyper detailed, strong expressiveness and emotionality»

MidJourney can also be used to generate paintings with specific content. The following examples show pictures I made of two Filipino girls, aged eight and 19, collecting shells at the beach, playing in the rice fields or dancing in traditional clothes.

Another excellent example of MidJourney's potential is using the tool as a source of inspiration in creative professions. Here, I used the tool to generate Nordic-style living rooms for apartments in Germany in grey and mustard colours.

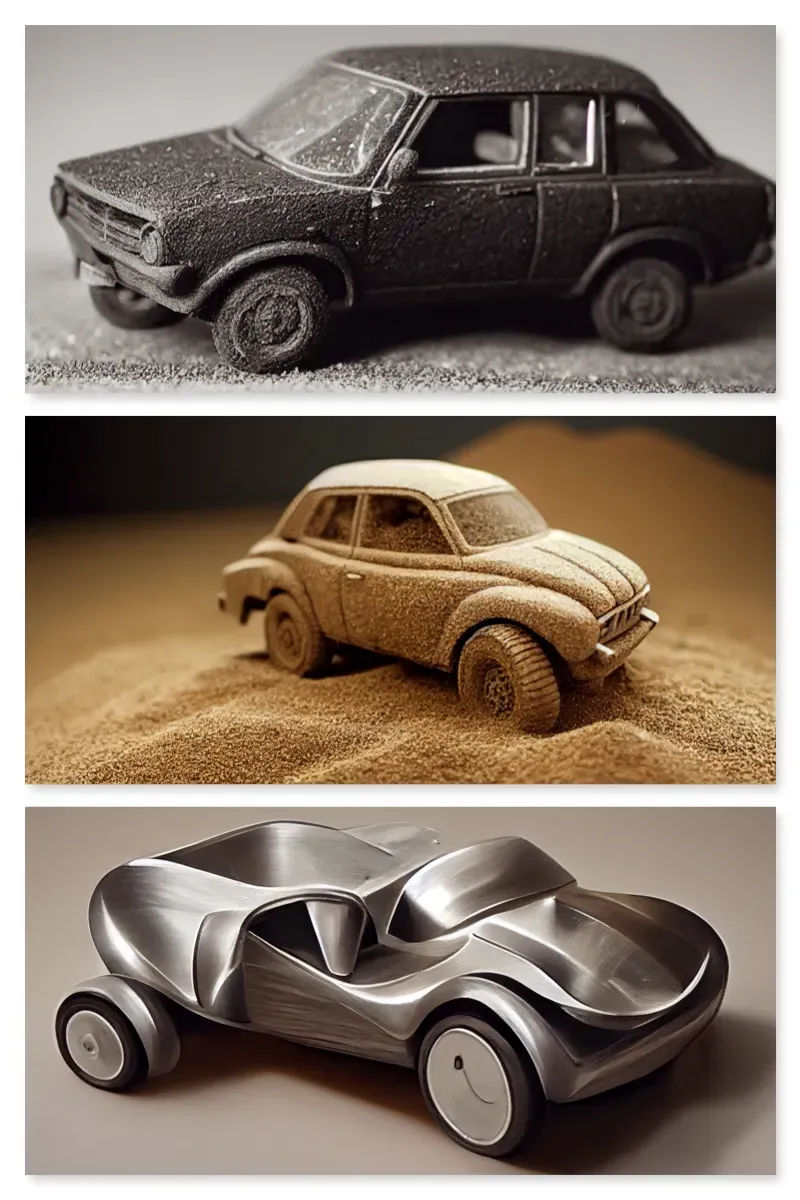

MidJourney can also generate toy cars made out of different materials. Here, I made toy cars out of basalt, stone and aluminium.

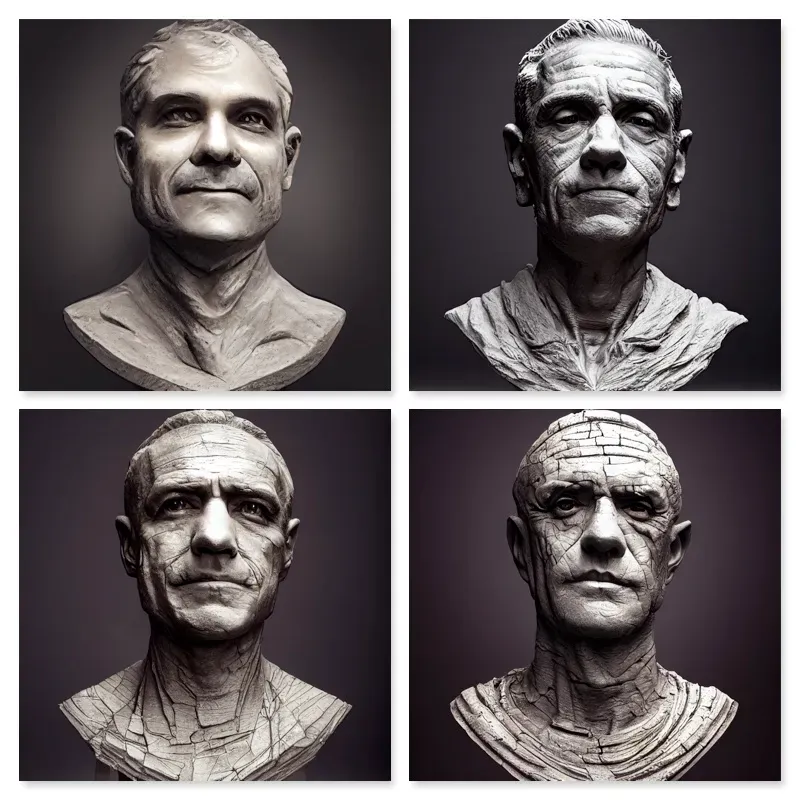

In my last MidJourney example, I generated busts of a middle-aged man based on my LinkedIn profile picture, showing the bust itself (not the man) at different stages of ageing.

MidJourney is a very powerful tool. Rex Wang's teapot guide[11] provides a comprehensive overview of possible prompts, using the Utah teapot as its subject.

Stable Diffusion

The most significant advantage of Stable Diffusion is that its model weights and source code are open source. With basic computer skills, you can install it on a GPU-powered laptop or desktop computer[12]. You can also experiment with it for free using Hugging Face's Stable Diffusion Demo[13] or Stability AI's DreamStudio[14]. At market prices, training Stable Diffusion would theoretically have cost around 600,000 USD. The following collage shows generated paintings of nuclear explosions in the styles of past artists.

DALL·E 2

One of the most substantial advantages of diffusion-based models is their ability to perform inpainting, a term borrowed from art conservation, where damaged, deteriorated or missing parts of an artwork are filled in to present a complete image.

Using the new outpainting capability of DALL·E 2, Orb Amsterdam asked OpenAI to help them imagine what the landscape between famous paintings by Van Gogh, Monet, Munch and Hokusai might look like[15].
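In diffusion models, inpainting and outpainting can both be expressed with a mask: pixels marked as known are kept from the original image, and the model only generates the rest. For outpainting, the known region is the original painting and the unknown region is the extended canvas around it. The following is a minimal sketch of a single mask-blending step in the style of RePaint (Lugmayr et al., 2022), assuming a hypothetical `generated` list that stands in for the model's output at the current denoising step. It is not DALL·E 2's implementation; it only illustrates the general idea.

```python
import math
import random


def inpaint_blend_step(generated, original, mask, alpha_bar, rng):
    """Combine the model's sample with the known pixels for one denoising step.

    generated: the model's sample for this step (a hypothetical stand-in here)
    original:  the clean source image; only entries where mask == 1 are used
    mask:      1 = keep the original pixel, 0 = let the model fill it in
    alpha_bar: cumulative noise-schedule value for this step (1.0 means no noise)
    """
    blended = []
    for gen, orig, keep in zip(generated, original, mask):
        # Noise the known pixel to the current step's level so it matches the
        # generated pixels: sqrt(a) * x0 + sqrt(1 - a) * eps.
        noisy_orig = (
            math.sqrt(alpha_bar) * orig
            + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        )
        blended.append(keep * noisy_orig + (1 - keep) * gen)
    return blended


if __name__ == "__main__":
    rng = random.Random(42)
    original = [0.9, 0.9, 0.0, 0.0]  # the last two pixels are "missing"
    mask = [1, 1, 0, 0]
    generated = [rng.gauss(0.0, 1.0) for _ in original]  # placeholder model output
    print(inpaint_blend_step(generated, original, mask, alpha_bar=0.5, rng=rng))
    print(inpaint_blend_step(generated, original, mask, alpha_bar=1.0, rng=rng))
```

With `alpha_bar=1.0` (the last step) the kept pixels come back unchanged and only the masked-out ones take the model's values. Repeating this blend at every denoising step keeps the known region anchored while the model fills in the rest; RePaint adds extra resampling steps so that the two regions agree more smoothly.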

While I am still on the waiting list for DALL·E 2 access, the prompt book already looks promising.

Remember, the future is already here; it is just not evenly distributed.

[1] DALL·E 2, https://openai.com/dall-e-2/

[2] MidJourney, https://www.midjourney.com/home/

[3] Stable Diffusion Public Release, https://stability.ai/blog/stable-diffusion-public-release

[4] What are Diffusion Models?, https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

[5] Source Code repository of Stable Diffusion, https://github.com/CompVis/stable-diffusion

[6] With Stable Diffusion, you may never believe what you see online again, https://arstechnica.com/information-technology/2022/09/with-stable-diffusion-you-may-never-believe-what-you-see-online-again/

[7] Scott Detweiler, https://www.sedetweiler.com/

[8] Scott Detweiler's YouTube channel, https://www.youtube.com/c/ScottDetweiler/videos

[9] MidJourney, https://www.midjourney.com/home/

[10] MidJourney Community Showcase, https://www.midjourney.com/showcase/

[11] Understanding MidJourney (and Stable Diffusion) through teapots, https://rexwang8.github.io/resource/ai/teapot

[12] How to Run Stable Diffusion on Your PC to Generate AI Images, https://www.howtogeek.com/830179/how-to-run-stable-diffusion-on-your-pc-to-generate-ai-images/

[13] Stable Diffusion Demo by Hugging Face, https://huggingface.co/spaces/stabilityai/stable-diffusion

[14] DreamStudio by Stability AI, https://beta.dreamstudio.ai/

[15] Orb Amsterdam on Twitter, https://twitter.com/OrbAmsterdam/status/1568200010747068417